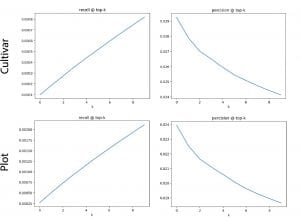

Here is the result:

Some training details, as a note:

ResNet-50, triplet loss, large images from both sensors, with (depth, depth, reflection) as the three channels.

The recall by plot is pretty low, as I expected, since the all-plot t-SNE looks like one undifferentiated mass. But I think the plots do go somewhere: when we run t-SNE on a few chosen plots, some of them do separate, so the embedding is organizing them by something. There are also some nuisance variables we don't care about (wind, for example) that may affect the appearance of the leaves. So I think we need a more specific metric to inspect and qualify the model. Maybe something like:

- Linear dependence between embedding space and ground truth measurement.

- Cluster distribution and variance.
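A rough sketch of how these two checks could be computed, using NumPy only. Everything here is an assumption for illustration: the function names, the synthetic embeddings, and the fake `height` measurement are made up, and the linear probe is a plain least-squares fit scored in-sample.

```python
import numpy as np


def linear_probe_r2(embeddings, target):
    """R^2 of an ordinary least-squares fit from embeddings to one measurement."""
    # Append a column of ones so the fit includes an intercept.
    X = np.hstack([embeddings, np.ones((len(embeddings), 1))])
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    residual = target - X @ coef
    ss_res = np.sum(residual ** 2)
    ss_tot = np.sum((target - target.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def cluster_variance_ratio(embeddings, plot_ids):
    """Mean within-plot spread divided by the spread of the plot centroids.

    Values well below 1 mean plots are tight relative to how far apart they sit.
    """
    plots = np.unique(plot_ids)
    centroids = np.stack([embeddings[plot_ids == p].mean(axis=0) for p in plots])
    within = np.mean([
        np.mean(np.sum((embeddings[plot_ids == p] - c) ** 2, axis=1))
        for p, c in zip(plots, centroids)
    ])
    between = np.mean(np.sum((centroids - centroids.mean(axis=0)) ** 2, axis=1))
    return within / between


# Synthetic stand-in data (hypothetical): 5 plots, 20 images each, 8-D embeddings.
rng = np.random.default_rng(0)
plot_ids = np.repeat(np.arange(5), 20)
centers = rng.normal(size=(5, 8)) * 3.0
emb = centers[plot_ids] + rng.normal(size=(100, 8)) * 0.5
# Fake ground-truth measurement that depends linearly on the embedding.
height = emb @ rng.normal(size=8) + rng.normal(size=100) * 0.1

r2 = linear_probe_r2(emb, height)
ratio = cluster_variance_ratio(emb, plot_ids)
print("linear probe R^2:", r2)
print("within/between variance ratio:", ratio)
```

On real embeddings the R^2 should be computed on held-out images, since an in-sample fit will look too optimistic when the embedding dimension is large relative to the number of samples.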

I'm also trying to train a model whose embedding space has only 2 dimensions, so that we can show what the network does directly in 2D and see whether there is anything interesting.

Plot Meaning Embedding

I'm also building the network to embed the abstract plot using the images and the date. Some questions arose while I was implementing it.

- Should it be trained with RGB images or depth?

- Which network structure should it use as the image feature extractor, and how deep should it be?

- When training it, should the feature extractor be frozen or not?

My initial plan is to use RGB, since the networks are pretrained on RGB images, and to use 3-4 layers of the Res50 without freezing.