Glitter pieces that are measured off but predicted on, where the prediction matches what we would actually expect

Quite a few pieces of glitter are measured to have an intensity of 0 (or < 0.1 normalized) in the test image, but are predicted to have an intensity > 0.6 normalized. If we look at the scan lines that correspond to the intensity plots of one such centroid, we see that they intersect right around where the light was displayed on the monitor for the test image. So we would expect to see this centroid lit in our test image.

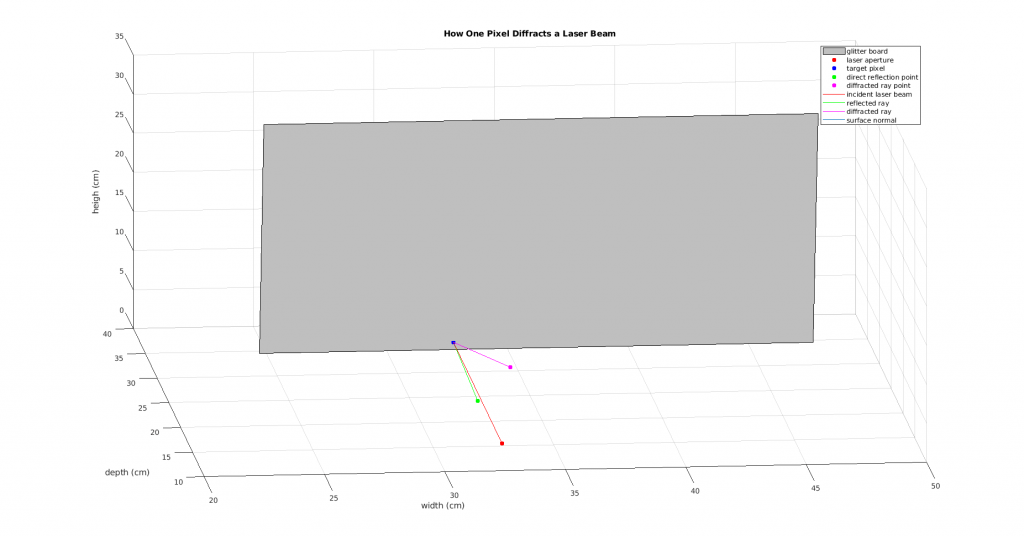
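As a minimal sketch of how these "measured off, predicted on" pieces could be picked out, assuming the measured and predicted intensities are stored as two normalized arrays indexed by centroid (the function name, array names, and sample values here are hypothetical, not taken from the actual pipeline):

```python
import numpy as np

def find_off_but_predicted_on(measured, predicted, off_max=0.1, on_min=0.6):
    """Return indices of centroids measured below off_max but predicted above on_min.

    Both inputs are assumed to be normalized intensities in [0, 1],
    with one entry per glitter centroid.
    """
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    mask = (measured < off_max) & (predicted > on_min)
    return np.flatnonzero(mask)

# Made-up values for illustration only.
measured = np.array([0.0, 0.05, 0.9, 0.02])
predicted = np.array([0.7, 0.2, 0.95, 0.65])
print(find_off_but_predicted_on(measured, predicted))
```

The thresholds default to the 0.1 and 0.6 values quoted above. The returned indices are the centroids worth inspecting by eye.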

Below, I have shown the centroid on the image (pink dot), and we can verify that there is no lit glitter piece there, nor are there any lit pieces nearby. If there were another lit piece close to this centroid, we might suspect that we had matched the centroid found in the test image to the wrong centroid from our glitter characterization. This does not seem to be the case though, so it's a bit of a mystery.

UPDATE: we have ideas 🙂

- threshold intensities in the camera calibration error function the same way I did in my Gaussian optimization (finding receptive fields)

- throw out Gaussians that are "bad", where "bad" can mean a low eigenvalue ratio or intensity plots that are not well matched
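The second idea could be sketched roughly as follows. This assumes each fitted Gaussian comes with a 2x2 covariance matrix, and that "eigenvalue ratio" means the smallest eigenvalue divided by the largest, so that a low ratio indicates a very elongated fit. That definition, the `min_ratio` cutoff, and the function names are all assumptions for illustration.

```python
import numpy as np

def eigenvalue_ratio(cov):
    """Smallest / largest eigenvalue of a symmetric covariance matrix.

    Assumed definition: values near 1 mean a roughly circular Gaussian,
    values near 0 mean a very elongated one.
    """
    eigvals = np.linalg.eigvalsh(np.asarray(cov, dtype=float))  # ascending order
    return eigvals[0] / eigvals[-1]

def keep_good_gaussians(covs, min_ratio=0.2):
    """Return (indices of Gaussians to keep, all ratios) given covariances."""
    ratios = np.array([eigenvalue_ratio(c) for c in covs])
    return np.flatnonzero(ratios >= min_ratio), ratios

# Made-up covariances: the first is nearly circular, the second very elongated.
covs = [
    [[4.0, 0.0], [0.0, 3.0]],
    [[10.0, 0.0], [0.0, 0.5]],
]
keep, ratios = keep_good_gaussians(covs)
print(keep, ratios)
```

A check on how well the intensity plots match could be added as a second mask in the same way. The right cutoff would need to be chosen by looking at the actual distribution of ratios.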

Aperture vs. Exposure

I played around with the aperture of the camera and took many pictures with varying aperture. As the f-number decreases (the aperture becomes larger), a lot more light is let in. As this happens, the center of a glitter piece seems to shift slowly, probably because, if the exposure stays the same for all aperture settings, some images will have saturated pixels. The image below shows multiple apertures (f/1.8, f/2, f/2.2, ..., f/9), where the exposure was kept the same in all of the images. We can see that some of the smaller f-number images seem to contain saturated pixels.
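One simple way to quantify the saturation, rather than judging it by eye, is to measure the fraction of pixels at the maximum value. This is a minimal sketch assuming 8-bit grayscale images already loaded as NumPy arrays; the function name and the sample array are hypothetical.

```python
import numpy as np

def saturated_fraction(image, max_value=255):
    """Fraction of pixels at or above max_value (255 assumes 8-bit data)."""
    image = np.asarray(image)
    return float(np.mean(image >= max_value))

# Made-up 2x4 "image" with two saturated pixels out of eight.
img = np.array([[10, 255, 200, 30],
                [255, 40, 50, 60]], dtype=np.uint8)
print(saturated_fraction(img))
```

Comparing this fraction across the aperture series would show at which f-number clipping starts to matter.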

After realizing that the exposure needs to change as the aperture varies, I re-took the pictures at f/1.8, f/4 and f/8 and varied the shutter speed. It looks like the shift happens less when the exposure at a low f-number is chosen so that the image looks similar in brightness to an image taken at a higher f-number.
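As a starting point for matching brightness across apertures, the standard photographic relation says that the light reaching the sensor scales with shutter time divided by the square of the f-number. The sketch below uses that relation to estimate an equivalent shutter time. It assumes the same ISO, sensor, and scene lighting, and ignores lens transmission differences, so the result is only a first guess to refine by eye. The function name is hypothetical.

```python
def equivalent_shutter_time(t_ref, n_ref, n_new):
    """Shutter time at f-number n_new giving the same exposure as t_ref at n_ref.

    Uses exposure proportional to t / N^2, so t_new = t_ref * (n_new / n_ref)^2.
    """
    return t_ref * (n_new / n_ref) ** 2

# Reference setting: f/8 with a 1 second exposure.
for n in (8.0, 4.0, 1.8):
    print(f"f/{n}: {equivalent_shutter_time(1.0, 8.0, n):.4f} s")
```

Under these assumptions, f/4 would need about a quarter of the f/8 shutter time, and f/1.8 roughly a twentieth.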

Next Steps

- fix the intensity plots in the camera calibration and re-run

- try throwing out centroids with weird Gaussians and re-run calibration

- take iPhone pictures with the XR and figure out which exposure works best with its aperture (f/1.8), so that we get images that look similar to our camera images (f/8, 1 second exposure)