The problem with common metric losses (triplet loss, contrastive loss and N-pair loss)

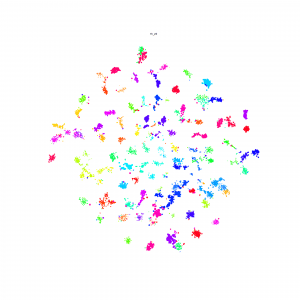

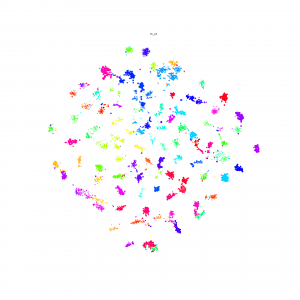

For a group of images that belong to the same class, these loss functions randomly sample two images from the group and force their dot product to be large (or their Euclidean distance to be small). As a result, the embedding points of the class end up clustered in a circular shape. This circular shape is in fact a very strong constraint on the embedding. The points should have more flexibility in how they cluster: for example a star shape, a strip shape, or one class of images split across several clusters.

Nearest Neighbor Loss

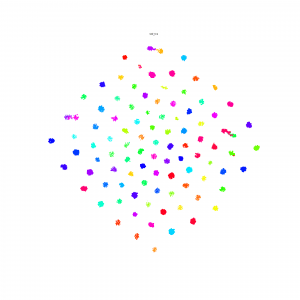

I designed a new loss to loosen the 'circle cluster' constraint. It starts from the idea at the end of the N-pair loss: construct a batch of images with N classes, where each class contains M images.
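The batch construction can be sketched as follows. This is only an illustration, not the author's code: the function name `sample_nm_batch` and its parameters are hypothetical, and it assumes the dataset is described by a flat list of integer labels.

```python
import random
from collections import defaultdict

def sample_nm_batch(labels, n_classes, m_per_class, seed=None):
    """Return dataset indices for a batch of n_classes classes, m_per_class images each.

    Hypothetical helper for illustration; `labels[i]` is the class of image i.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    # Only classes with at least M images can contribute a full group.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= m_per_class]
    if len(eligible) < n_classes:
        raise ValueError("not enough classes with m_per_class images")
    batch = []
    for c in rng.sample(eligible, n_classes):
        # Draw M distinct images of class c.
        batch.extend(rng.sample(by_class[c], m_per_class))
    return batch
```

With M images per class in every batch, each image has M - 1 same-label candidates to choose a nearest neighbor from.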

1. Find the same-label pair

When calculating the similarity for image i with label c in the batch, I first find its nearest neighbor among the other images with label c and use it as the same-label pair.
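A minimal NumPy sketch of this step, assuming that similarity is the dot product between embedding rows. The function name `nearest_same_label` is hypothetical and does not come from the original implementation.

```python
import numpy as np

def nearest_same_label(embeddings, labels):
    """For each row i, return the index and similarity of its most similar same-label row."""
    labels = np.asarray(labels)
    sim = embeddings @ embeddings.T            # pairwise dot-product similarity
    same = labels[:, None] == labels[None, :]  # True where labels match
    np.fill_diagonal(same, False)              # an image is not its own neighbor
    masked = np.where(same, sim, -np.inf)      # hide different-label entries
    nn_idx = masked.argmax(axis=1)
    nn_sim = masked[np.arange(len(labels)), nn_idx]
    return nn_idx, nn_sim
```

If an image has no other same-label image in the batch, its returned similarity is `-inf` and the index is meaningless, so callers should check the similarity first.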

2. Set the threshold for the same-label pair

If the similarity to the nearest neighbor is smaller than a threshold, I do not construct the pair.

3. Set the threshold for the different-label pair

The same threshold is used here: if an image with a different label has a similarity smaller than the threshold, it is not pushed away.
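The three steps can be combined into one function, sketched below. The text above does not give the exact form of the loss, so the pull term `1 - s` and the push term `s - threshold` are assumptions chosen for illustration, as are the L2 normalization (which makes the dot product a cosine similarity), the default threshold of 0.5, and the name `nearest_neighbor_loss`.

```python
import numpy as np

def nearest_neighbor_loss(embeddings, labels, threshold=0.5):
    """Illustrative loss: pull the nearest same-label neighbor, push close different-label images."""
    labels = np.asarray(labels)
    # Assumption: normalize rows so the dot product is a cosine similarity in [-1, 1].
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sim = z @ z.T
    same = labels[:, None] == labels[None, :]
    np.fill_diagonal(same, False)
    diff = labels[:, None] != labels[None, :]

    # Step 1: similarity to the nearest same-label neighbor (-inf if none exists).
    pos_sim = np.where(same, sim, -np.inf).max(axis=1)
    # Step 2: keep the pair only if that similarity reaches the threshold.
    pos_active = pos_sim >= threshold
    pull = np.where(pos_active, 1.0 - pos_sim, 0.0)  # assumed pull term

    # Step 3: push only different-label images whose similarity reaches the threshold.
    neg_active = diff & (sim >= threshold)
    push = np.where(neg_active, sim - threshold, 0.0).sum(axis=1)  # assumed push term

    return float((pull + push).mean())
```

In this sketch, two same-label groups that sit far apart contribute nothing to the loss, so a class is free to occupy several clusters. Pairs exactly at the threshold are treated as active, which is also a choice the text leaves open.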