February 21, 2018

Patient experience encompasses the range of interactions that patients have with their providers and the healthcare system. There are many good reasons to measure and report patient experience data and to reward providers and hospitals for better performance. However, patient experience surveys and data collection need to be reliable and valid. Reliability and validity are critical because patient experience data are used for a variety of purposes, including managerial decisions (e.g., credentialing, contract renewal, or incentive bonus programs) [1]. In addition, the Centers for Medicare & Medicaid Services (CMS) Hospital Value-Based Purchasing (VBP) program identifies patient experience as a fundamental marker of value and integrates patient experience into public reporting. Patient experience data will become increasingly important with the implementation of the Merit-based Incentive Payment System (MIPS) under the Medicare Access and CHIP Reauthorization Act of 2015 (MACRA). In 2016, 44% of healthcare organizations reported having added a Chief Experience Officer (CXO) to their C-suite, with the underlying expectation of benefiting financially from higher patient experience scores and positive publicity [2]. Currently, there are no patient experience mandates for emergency medicine. However, such mandates are on the near horizon through the Emergency Department Consumer Assessment of Healthcare Providers and Systems (EDCAHPS) survey.

A recent study by Pines et al., published in the Annals of Emergency Medicine, sheds light on the current state of patient experience data reporting in emergency medicine. The study examined month-to-month variability and construct validity in Press Ganey Associates patient experience data gathered from 2012 to 2015, covering 1,758 facility-months and 10,328 physician-months [5]. Using a retrospective cross-sectional design, Dr. Pines and his team found that patient experience scores varied greatly from month to month, with physician-level scores varying considerably more than facility-level scores. They also found that facility-level scores have greater construct validity than physician-level scores.

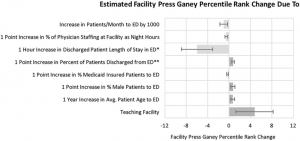

Figure 1: Estimated Facility Press Ganey Rank Changes

Across facility-months, 40.8% changed by more than 10 percentile points, 14.7% by more than 20 points, and 4.4% by more than 30 points. For facility-level construct validity, several facility factors predicted higher scores: teaching status; higher proportions of older, male, and discharged patients without Medicaid insurance; lower patient volume; less need for physician night coverage; and shorter lengths of stay for discharged patients.

Figure 2: Estimated Physician Press Ganey Rank Changes

Across physician-months, 31.9% changed by more than 20 percentile points, 21.5% by more than 30 points, and 13.6% by more than 40 points. For physician-level construct validity, the following predicted higher scores: younger physician age, participation in satisfaction training, more relative value units per visit, more commercially insured patients, greater use of computed tomography or magnetic resonance imaging, working during less crowded times, and working fewer night shifts.

“The concept of measuring patient experience and rewarding providers who deliver a better experience is absolutely right on. No one argues with that. Yet what we found is that the data currently being gathered is not particularly reliable nor valid.” – Dr. Jesse Pines [6]

Another concern was the data collection process, which yields few responses and low response rates. In this study, patient response rates ranged from 3.6% to 16%. Corresponding author Dr. Arvind Venkat remarked, “Imagine you conduct a survey, and only the very happy and very unhappy return their surveys. What you get is a very biased sample. That makes it difficult to come to any meaningful conclusions from the data” [7]. The researchers proposed several approaches that could improve response rates, but more work is needed to show that these approaches raise response rates without compromising the validity of the survey.

Ultimately, optimizing how patient experience data are gathered may reduce their variability in the ED and better inform decision-making and quality measurement [8]. Until reliability and validity improve, however, patient experience data, particularly at the provider level, need an overhaul [9].

Ameer Khalek is an MPH student at the GWU Milken Institute School of Public Health.